Abstract

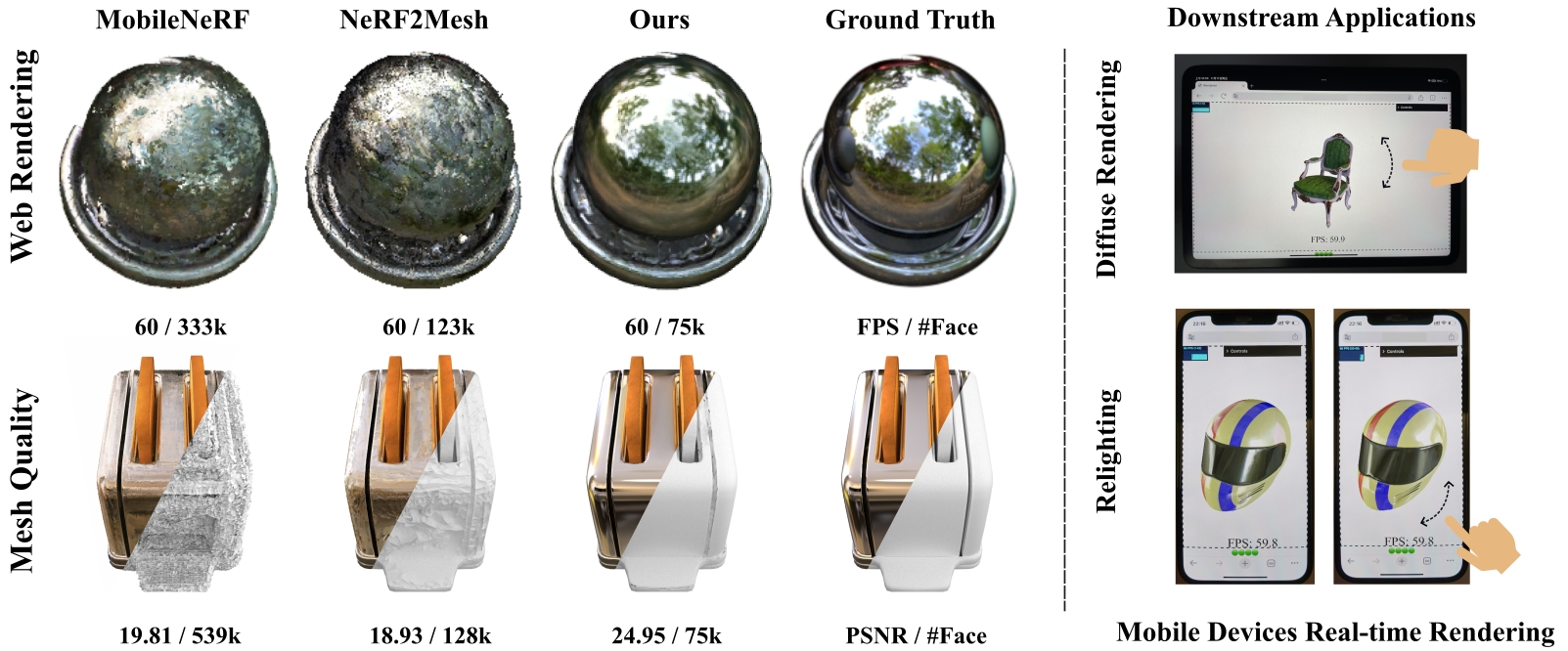

This work tackles real-time novel view synthesis for reflective surfaces across a variety of scenes. Existing real-time rendering methods, especially mesh-based ones, often struggle to model surfaces with rich view-dependent appearance. Our key idea is to use meshes to accelerate rendering while introducing a novel parameterization of view-dependent information. We decompose the color into diffuse and specular components, and model the specular color along the reflected direction using a neural environment map. On highly reflective surfaces, our method achieves reconstruction quality comparable to state-of-the-art offline methods, while also enabling efficient real-time rendering on edge devices such as smartphones.
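The diffuse/specular decomposition described above can be illustrated with a minimal sketch. This is not the authors' implementation: `toy_environment` is a hypothetical analytic stand-in for the learned neural environment map, and the additive combination with a scalar `spec_weight` is an assumption made for illustration. Only the reflection formula r = d - 2(d·n)n is standard.

```python
import numpy as np

def reflect(view_dir, normal):
    """Reflect a view direction (pointing from camera to surface) about a normal."""
    d = view_dir / np.linalg.norm(view_dir)
    n = normal / np.linalg.norm(normal)
    return d - 2.0 * np.dot(d, n) * n

def toy_environment(direction):
    """Hypothetical stand-in for a neural environment map: a ground-to-sky gradient."""
    t = 0.5 * (direction[2] + 1.0)  # 0 when looking straight down, 1 straight up
    ground = np.array([0.2, 0.15, 0.1])
    sky = np.array([0.6, 0.8, 1.0])
    return (1.0 - t) * ground + t * sky

def shade(diffuse, spec_weight, view_dir, normal, env=toy_environment):
    """Assumed combination: view-independent diffuse plus a weighted
    environment lookup along the reflected direction."""
    r = reflect(view_dir, normal)
    specular = spec_weight * env(r)
    return np.clip(diffuse + specular, 0.0, 1.0)

# Camera looks straight down (-z) at an upward-facing surface.
color = shade(
    diffuse=np.array([0.1, 0.1, 0.1]),
    spec_weight=0.5,
    view_dir=np.array([0.0, 0.0, -1.0]),
    normal=np.array([0.0, 0.0, 1.0]),
)
```

Because the specular term depends on the view direction only through the reflected direction, the same environment lookup can be shared by every surface point; in this sketch, swapping `env` for a learned function would leave the rest of the shading unchanged.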

Chair

Chair  Drums

Drums  Ficus

Ficus  Hotdog

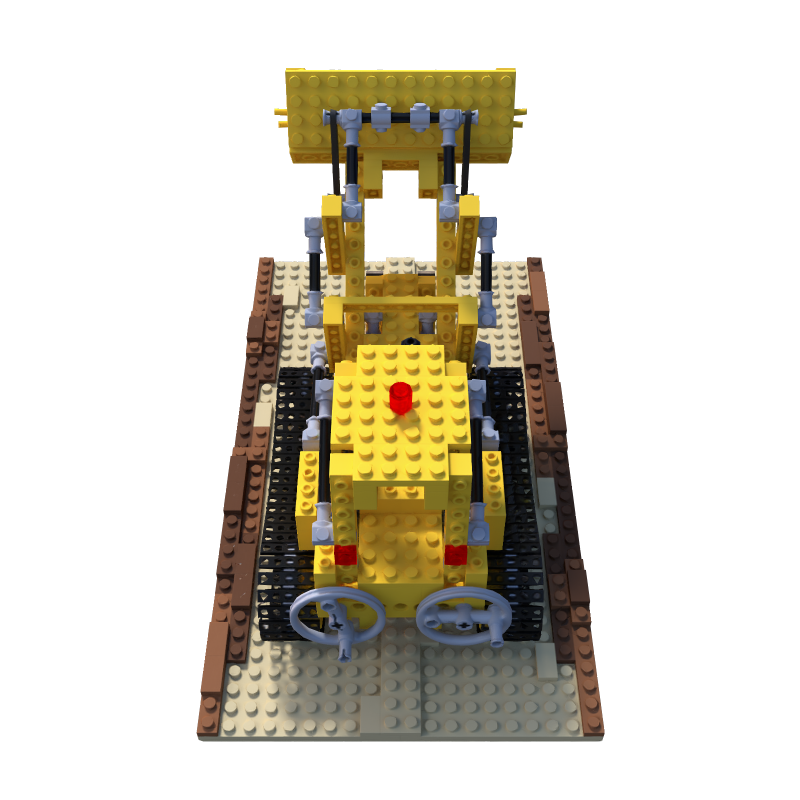

Hotdog  Lego

Lego  Materials

Materials  Mic

Mic  Ship

Ship  Gardenspheres

Gardenspheres  Toycar

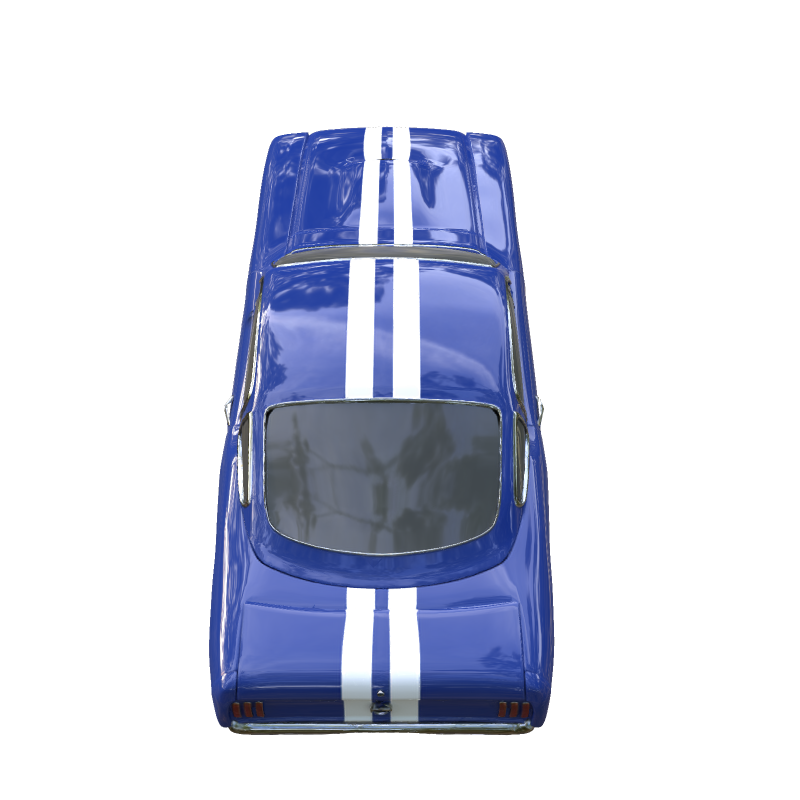

Toycar  Sedan(Coming Soon)

Sedan(Coming Soon)